Light. What actually is light? Light is electromagnetic radiation of any wavelength, and it has very interesting properties: it behaves both as a particle and as a wave. In the particle picture, light is described as photons, massless elementary particles that carry the electromagnetic force.

There are many ways to generate photons. They can come from a flashlight, a candle, an LED, or a thermal source that approximates a black-body, like our sun. Light sources may generate photons of different frequencies or wavelengths. Some of these wavelengths are in the visible region of the electromagnetic spectrum. This is why we see colors from light sources, such as the orange tint of a tungsten-halogen lamp.

The objective of this article is to show the emittance spectra of different light sources and the emittance of an idealized black-body in the visible region at different temperatures.

Light Emitting Diode

Light Emitting Diodes, or LEDs, are common nowadays. Thanks to developments in semiconductor technology, these light sources have become cheap to produce. They also require little power to operate, which is why they are now commonly used in flashlights; closely related semiconductor devices, laser diodes, are used in portable lasers.

In Figure 1, we can see the emittance spectra obtained from a gallium nitride (GaN) based LED flashlight. Figure 1a is the emittance spectrum obtained from our experiment, while Figure 1b shows the spectrum obtained from another experiment.

(a)

(b)

Figure 1. Emittance spectra of a GaN based LED flashlight. (a) Our experimentally obtained emittance spectrum and (b) the emittance spectrum of a white LED obtained from a different experiment.

As we can see, the two spectra are similar to each other. Both have a peak near 450 nm, which is the GaN emission peak, and a broadband emittance from 500 nm to 650 nm, which corresponds to the Ce:YAG phosphor in the device. Even though we perceive the light as white, its intensity is not evenly distributed across the visible wavelengths.
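One way to locate features like these numerically is a simple local-maximum search. The sketch below runs on a synthetic two-component spectrum: a narrow line near 450 nm plus a broad band. The shapes, centers, widths, and heights are invented for illustration and are not the measured data, and `gaussian` and `local_maxima` are hypothetical helper names.

```python
import numpy as np

def gaussian(x, center, width):
    """Unit-height Gaussian bump, used only to build the synthetic spectrum."""
    return np.exp(-0.5 * ((x - center) / width) ** 2)

# Synthetic stand-in for a white-LED spectrum (NOT the measured data):
# a narrow line near 450 nm plus a broad band centered at 560 nm.
wavelength_nm = np.arange(380.0, 781.0, 1.0)
intensity = (1.0 * gaussian(wavelength_nm, 450.0, 10.0)
             + 0.6 * gaussian(wavelength_nm, 560.0, 50.0))

def local_maxima(x, y, min_height):
    """Return x values where y exceeds its left neighbor, is not below its
    right neighbor, and is at least min_height."""
    inner = y[1:-1]
    is_peak = (inner > y[:-2]) & (inner >= y[2:]) & (inner >= min_height)
    return x[1:-1][is_peak]

peaks = local_maxima(wavelength_nm, intensity, min_height=0.1)
print("peak wavelengths (nm):", peaks)
```

With a real measurement, `wavelength_nm` and `intensity` would be replaced by the spectrometer output, and `min_height` would need tuning so that noise is not reported as a peak.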

Butane lighter

Butane is a highly flammable, colorless gas that can be easily liquefied. It is used in most commercially available lighters. The lighter produces light through combustion, a free-radical reaction.

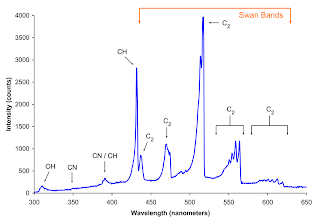

In Figure 2, we can see the experimentally obtained emittance spectra of the butane lighter.

(a)

(b)

Figure 2. Emittance spectra of a butane lighter. (a) Our experimentally obtained emittance spectrum and (b) emittance spectrum obtained from another experiment.

We can see that Figure 2a and Figure 2b differ from each other. This is because the experiment in Figure 2b was done in an oxygen-rich environment, where the butane burns blue due to the abundance of oxygen in its surroundings. The experiment in Figure 2a was done under normal room conditions, where the butane lighter burns orange instead of blue. However, the peak locations in our experimentally obtained spectrum were close to those in Figure 2b. This was expected because the same chemical reactions are taking place and the energy barriers that must be overcome for combustion are the same.

The following part shows the emittance spectra of a laptop LCD and a projector displaying white, red, green, and blue. From these we will observe how the laptop LCD and the projector behave while displaying each color.

White

In Figure 3, we can see the emittance spectra of both the LCD and the projector. We can see that the two spectra are very different.

(a)

(b)

Figure 3. Emittance spectra of both (a) laptop LCD and (b) projector displaying a white color.

It can be observed that the LCD emits more yellow than blue wavelengths. The opposite is observed for the projector: emittance in the yellow to red wavelengths is minimal, while the blue to violet wavelengths are emitted strongly. The only similarity is that both emit a significant amount of green wavelengths.

Red

Now we let both the LCD and the projector display a red color. The emittance spectra of the two are shown in Figure 4.

(a)

(b)

Figure 4. Emittance spectra of both (a) laptop LCD and (b) projector displaying a red color.

The result of the experiment was as expected. Since both are displaying a red color, the emittance spectra were expected to have high values in the red to yellow region and minimal emittance in other regions. For the LCD, however, we can see that it also emitted a significant amount of green-yellow wavelengths.

Green

Again, the LCD and projector are made to display a green color. Figure 5 shows the emittance spectra of the two.

(a)

(b)

Figure 5. Emittance spectra of both (a) laptop LCD and (b) projector while displaying a green color.

Again, the emittance spectra were expected to have high values at the green and near-green wavelengths. However, the LCD has discrete emittance at the blue and yellow wavelengths, while the projector has broadband emittance from the blue to the near-yellow wavelengths.

Blue

Lastly, the LCD and projector were made to display a blue color. Figure 6 shows the emittance spectra of both displays.

(a)

(b)

Figure 6. Emittance spectra of both (a) laptop LCD and (b) projector while displaying a blue color.

Again, the high values in both spectra were observed in the blue region, as expected. For the LCD, however, a discrete emittance peak was also observed at the green wavelengths, alongside the expected broad spectrum in the blue region. For the projector, a peak was observed in the blue-violet region and a small distribution was observed in the blue region.

Black-body Radiation

A black-body is an idealized object in physics. It absorbs all of the electromagnetic radiation that falls on it and re-emits radiation in a continuous, characteristic spectrum. The peak of this spectrum depends on the temperature of the black-body. Light with a shorter wavelength has a higher frequency, and a higher frequency means a higher photon energy. A hotter black-body has more thermal energy available, so its emission shifts toward these higher-energy, shorter wavelengths. That is why an ideal black-body appears blue when it is very hot and red when it is relatively cold. The chromaticity of the black-body at different temperatures is shown in Figure 7.

Figure 7. Chromaticity of a black-body at different temperatures.
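The link between wavelength and photon energy used in this argument is the standard relation below, where h is Planck's constant, c is the speed of light in a vacuum, and ν and λ are the frequency and wavelength of the light. As a worked example, it is evaluated at 450 nm and 650 nm, the two ends of the LED spectrum discussed earlier:

$$
E = h\nu = \frac{hc}{\lambda}, \qquad hc \approx 1239.84\ \text{eV}\cdot\text{nm}
$$

$$
E(450\ \text{nm}) \approx \frac{1239.84}{450}\ \text{eV} \approx 2.76\ \text{eV}, \qquad
E(650\ \text{nm}) \approx \frac{1239.84}{650}\ \text{eV} \approx 1.91\ \text{eV}
$$

A blue photon therefore carries roughly 1.4 times the energy of a red one.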

The spectrum of the black-body at a specific temperature is given by Planck's black-body radiation law, shown below.
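In its per-wavelength form for the spectral energy density, Planck's law can be written as follows. This particular form is an assumption here, chosen because spectral energy density is the quantity named in Figure 8; λ denotes the wavelength and T the absolute temperature of the black-body.

$$
u(\lambda, T) = \frac{8\pi h c}{\lambda^{5}} \, \frac{1}{\exp\!\left(\dfrac{h c}{\lambda k T}\right) - 1}
$$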

In the equation above, h is Planck's constant, k is the Boltzmann constant, and c is the speed of light in a vacuum. With Planck's law, we can get the emittance spectrum of a black-body given its temperature.

Figure 8a shows the spectrum of an ideal black-body in the visible region for temperatures ranging from 4000 K to 7000 K. We can see that as the temperature rises, the peak emittance of the black-body shifts toward the blue.
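A minimal numerical sketch of this calculation is given below. It assumes the per-wavelength spectral energy density form of Planck's law, u(λ, T) = (8πhc/λ⁵) / (exp(hc/λkT) − 1), which matches the quantity named in Figure 8 but is an assumption here. The function names and the 1 nm wavelength grid are illustrative choices, not the code used to produce the figures.

```python
import numpy as np

H = 6.62607015e-34   # Planck constant, J s
C = 2.99792458e8     # speed of light in vacuum, m/s
K_B = 1.380649e-23   # Boltzmann constant, J/K

def spectral_energy_density(wavelength_m, temperature_k):
    """Planck spectral energy density per unit wavelength, in J/m^4."""
    x = H * C / (wavelength_m * K_B * temperature_k)
    # expm1(x) = exp(x) - 1, computed accurately even for small x
    return (8.0 * np.pi * H * C / wavelength_m**5) / np.expm1(x)

def visible_peak_nm(temperature_k):
    """Wavelength (nm) of maximum energy density on a 380-780 nm grid."""
    wavelength_nm = np.linspace(380.0, 780.0, 401)  # 1 nm steps
    u = spectral_energy_density(wavelength_nm * 1e-9, temperature_k)
    return wavelength_nm[np.argmax(u)]

for t in (4000.0, 5000.0, 6000.0, 7000.0):
    print(f"T = {t:.0f} K: peak within the visible window near {visible_peak_nm(t):.0f} nm")
```

Over this temperature range the peak stays inside the visible window and moves from the red end (roughly 724 nm at 4000 K) toward the violet end (roughly 414 nm at 7000 K).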

(a)

(b)

Figure 8. Spectral energy density of an ideal black-body at different temperatures. (a) Spectral energy density of a black-body in the visible region for temperatures ranging from 4000 K to 7000 K. (b) Spectral energy density of a black-body for temperatures ranging from 1000 K to 7000 K, displayed over a much wider range of wavelengths. The temperature of each black-body is placed near the peak value of its spectrum.

Figure 8b shows the spectrum of the black-body for temperatures ranging from 1000 K to 7000 K over a much wider range of wavelengths. We can see that at 1000 K, the peak of the spectrum is at about 3000 nm, in the infrared region. This hints at why the black-body is black in color at room temperature: since we can't see infrared wavelengths, we do not see the emission of the black-body.

Figure 9. Spectral energy density of a black-body at room temperature.

In Figure 9, we can see the spectral energy density of the black-body at room temperature. The peak emittance of the ideal black-body is found at about 10000 nm, a much longer wavelength still. This clearly explains why a black-body appears black at room temperature!

In summary, we have shown the spectra of different light sources and compared them with accepted values. We also investigated how light sources that can display different colors behave for each displayed color. Lastly, the emittance spectrum of an ideal black-body was investigated at different temperatures, and an explanation of why a black-body appears black at room temperature was provided.
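The peak positions quoted for Figures 8b and 9 can be cross-checked with Wien's displacement law, λ_peak = b / T, where b ≈ 2.898 × 10⁻³ m·K. The sketch below assumes a room temperature of 300 K, a value not stated in the article, and `wien_peak_nm` is an illustrative helper name.

```python
WIEN_B = 2.897771955e-3  # Wien displacement constant, m K

def wien_peak_nm(temperature_k):
    """Peak wavelength (nm) of the per-wavelength black-body spectrum."""
    return WIEN_B / temperature_k * 1e9

# 300 K is an assumed room temperature; the other values appear in Figure 8.
for t in (300.0, 1000.0, 4000.0, 7000.0):
    print(f"T = {t:6.0f} K -> peak near {wien_peak_nm(t):.0f} nm")
```

This gives a peak near 2898 nm at 1000 K and near 9659 nm at 300 K, consistent with the rounded values of 3000 nm and 10000 nm read from the figures, and both well outside the visible range of roughly 380 nm to 780 nm.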

References: